Part 11: Your Poetry is Served

This continues the introduction started here. You can find an index to the entire series here.

A Twisted Poetry Server

Now that we’ve learned so much about writing clients with Twisted, let’s turn around and re-implement our poetry server with Twisted too. And thanks to the generality of Twisted’s abstractions, it turns out we’ve already learned almost everything we need to know. Take a look at our Twisted poetry server located in twisted-server-1/fastpoetry.py. It’s called fastpoetry because this server sends the poetry as fast as possible, without any delays at all. Note there’s significantly less code than in the client!

Let’s take the pieces of the server one at a time. First, the PoetryProtocol:

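```python
from twisted.internet.protocol import Protocol


class PoetryProtocol(Protocol):

    def connectionMade(self):
        # Send the whole poem, then close once it has all been written.
        self.transport.write(self.factory.poem)
        self.transport.loseConnection()
```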

Like the client, the server uses a separate Protocol instance to manage each different connection (in this case, connections that clients make to the server). Here the Protocol is implementing the server-side portion of our poetry protocol. Since our wire protocol is strictly one-way, the server’s Protocol instance only needs to be concerned with sending data. If you recall, our wire protocol requires the server to start sending the poem immediately after the connection is made, so we implement the connectionMade method, a callback that is invoked after a Protocol instance is connected to a Transport.

Our method tells the Transport to do two things: send the entire text of the poem (self.transport.write) and close the connection (self.transport.loseConnection). Of course, both of those operations are asynchronous. So the call to write() really means “eventually send all this data to the client” and the call to loseConnection() really means “close this connection once all the data I’ve asked you to write has been written”.
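A toy model without sockets can make those two promises concrete. `ToyTransport` and its `on_writable(capacity)` hook are hypothetical and are not Twisted API. `on_writable` stands in for the event loop noticing that the socket can accept `capacity` more bytes.

```python
class ToyTransport:
    """Toy model (not Twisted's API) of buffered, asynchronous writes."""

    def __init__(self):
        self.buffer = b""      # data accepted by write() but not yet sent
        self.sent = b""        # data the "OS" has actually taken so far
        self.closing = False   # lose_connection() has been requested
        self.closed = False

    def write(self, data):
        # Returns immediately: the data is only queued, not sent.
        self.buffer += data

    def lose_connection(self):
        # Close only after everything already written has been flushed.
        self.closing = True
        self._maybe_close()

    def on_writable(self, capacity):
        # Called by the event loop when the socket can take `capacity` bytes.
        chunk, self.buffer = self.buffer[:capacity], self.buffer[capacity:]
        self.sent += chunk
        self._maybe_close()

    def _maybe_close(self):
        if self.closing and not self.buffer:
            self.closed = True


t = ToyTransport()
t.write(b"Once upon a midnight dreary")
t.lose_connection()
print(t.closed)   # False: 27 bytes are still queued
t.on_writable(16)
t.on_writable(16)
print(t.closed)   # True: buffer drained, so the close went through
```

The order of the two calls in `connectionMade` does not matter for correctness. The close is deferred until the buffer is empty.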

As you can see, the Protocol retrieves the text of the poem from the Factory, so let’s look at that next:

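```python
from twisted.internet.protocol import ServerFactory


class PoetryFactory(ServerFactory):

    protocol = PoetryProtocol  # class instantiated for each new connection

    def __init__(self, poem):
        self.poem = poem  # shared by every PoetryProtocol this factory builds
```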

Now that’s pretty darn simple. Our factory’s only real job, besides making PoetryProtocol instances on demand, is storing the poem that each PoetryProtocol sends to a client.
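Twisted's default `Factory.buildProtocol(addr)` creates an instance of the factory's `protocol` class and sets that instance's `factory` attribute. That is how `PoetryProtocol` finds `self.factory.poem`. The pure-Python imitation below mirrors the mechanism so it runs without Twisted installed. `MiniFactory`, `MiniPoetryFactory`, and `MiniPoetryProtocol` are hypothetical stand-ins.

```python
class MiniFactory:
    """Imitation of Twisted's Factory (hypothetical, for illustration)."""

    protocol = None  # subclasses set this to a protocol class

    def build_protocol(self, addr):
        # Like Twisted's default buildProtocol: make a fresh instance and
        # give it a back-reference to the factory. `addr` is unused here.
        p = self.protocol()
        p.factory = self
        return p


class MiniPoetryProtocol:
    factory = None  # filled in by build_protocol()

    def connection_made(self):
        # Each instance reads the shared poem from its factory.
        return self.factory.poem


class MiniPoetryFactory(MiniFactory):
    protocol = MiniPoetryProtocol

    def __init__(self, poem):
        self.poem = poem


factory = MiniPoetryFactory(b"Because I could not stop for Death\n")
p1 = factory.build_protocol(("127.0.0.1", 50001))
p2 = factory.build_protocol(("127.0.0.1", 50002))
print(p1 is p2)                                      # False: one per connection
print(p1.connection_made() == p2.connection_made())  # True: same shared poem
```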

Notice that we are sub-classing ServerFactory instead of ClientFactory. Since our server is passively listening for connections instead of actively making them, we don’t need the extra methods ClientFactory provides. How can we be sure of that? Because we are using the listenTCP reactor method and the documentation for that method explains that the factory argument should be an instance of ServerFactory.

Here’s the main function where we call listenTCP:

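```python
def main():
    options, poetry_file = parse_args()  # defined elsewhere in fastpoetry.py

    with open(poetry_file, 'rb') as f:  # read bytes, since transports write bytes
        poem = f.read()

    factory = PoetryFactory(poem)

    from twisted.internet import reactor

    # Port 0 asks the OS to pick any free port.
    port = reactor.listenTCP(options.port or 0, factory,
                             interface=options.iface)

    print('Serving %s on %s.' % (poetry_file, port.getHost()))

    reactor.run()
```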

It basically does three things:

- Read the text of the poem we are going to serve.

- Create a PoetryFactory with that poem.

- Use listenTCP to tell Twisted to listen for connections on a port, and use our factory to make the protocol instances for each new connection.

After that, the only thing left to do is tell the reactor to start running the loop. You can use any of our previous poetry clients (or just netcat) to test out the server.
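A minimal blocking client is enough for a quick check, because the server simply sends the poem and closes. This is a Python 3 sketch. `get_poem` and its parameters are hypothetical names, and the port must be whatever the server printed at startup.

```python
import socket


def get_poem(host, port, bufsize=4096):
    """Read from the server until it closes the connection (EOF)."""
    chunks = []
    with socket.create_connection((host, port)) as sock:
        while True:
            data = sock.recv(bufsize)
            if not data:  # empty read: the server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


# Example, assuming fastpoetry.py reported that it is serving on port 10000:
# print(get_poem("localhost", 10000).decode())
```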

Discussion

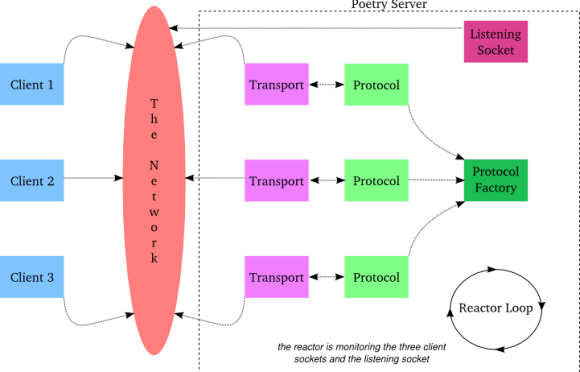

Recall Figure 8 and Figure 9 from Part 5. Those figures illustrated how a new Protocol instance is created and initialized after Twisted makes a new connection on our behalf. It turns out the same mechanism is used when Twisted accepts a new incoming connection on a port we are listening on. That’s why both connectTCP and listenTCP require factory arguments.

One thing we didn’t show in Figure 9 is that the connectionMade callback is also called as part of Protocol initialization. This happens no matter what, but we didn’t need to use it in the client code. And the Protocol methods that we did use in the client aren’t used in the server’s implementation. So if we wanted to, we could make a shared library with a single PoetryProtocol that works for both clients and servers. That’s actually the way things are typically done in Twisted itself. For example, the NetstringReceiver Protocol can both read and write netstrings from and to a Transport.
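A netstring is the payload's length in decimal ASCII, a colon, the payload, and a comma, so `b"hello"` travels as `b"5:hello,"`. Both directions use the same framing, so one class can encode outgoing strings and decode incoming ones. The codec below is a standalone, stdlib-only sketch for illustration. It is not Twisted's NetstringReceiver, whose counterparts are the `sendString()` method for writing and the `stringReceived()` callback for reading.

```python
class NetstringCodec:
    """Encode and incrementally decode netstrings (illustrative sketch)."""

    def __init__(self):
        self._buffer = b""  # received bytes not yet parsed

    @staticmethod
    def encode(payload):
        # <decimal length>:<payload>,
        return str(len(payload)).encode("ascii") + b":" + payload + b","

    def feed(self, data):
        """Add received bytes; return every netstring completed so far."""
        self._buffer += data
        messages = []
        while True:
            colon = self._buffer.find(b":")
            if colon == -1:
                break  # length prefix not complete yet
            prefix = self._buffer[:colon]
            if not prefix.isdigit():
                raise ValueError("malformed netstring length")
            end = colon + 1 + int(prefix)
            if len(self._buffer) < end + 1:
                break  # payload or trailing comma still missing
            if self._buffer[end:end + 1] != b",":
                raise ValueError("malformed netstring terminator")
            messages.append(self._buffer[colon + 1:end])
            self._buffer = self._buffer[end + 1:]
        return messages


codec = NetstringCodec()
wire = codec.encode(b"hello") + codec.encode(b"world")
print(wire)                  # b'5:hello,5:world,'
print(codec.feed(wire[:7]))  # []: first netstring not complete yet
print(codec.feed(wire[7:]))  # [b'hello', b'world']
```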

We skipped writing a low-level version of our server, but let’s think about what sort of things are going on under the hood. First, calling listenTCP tells Twisted to create a listening socket and add it to the event loop. An “event” on a listening socket doesn’t mean there is data to read; instead it means there is a client waiting to connect to us.

Twisted will automatically accept incoming connection requests, thus creating a new client socket that links the server directly to an individual client. That client socket is also added to the event loop, and Twisted creates a new Transport and (via the PoetryFactory) a new PoetryProtocol instance to service that specific client. So the Protocol instances are always connected to client sockets, never to the listening socket.

We can visualize all of this in Figure 26:

In the figure there are three clients currently connected to the poetry server. Each Transport represents a single client socket, and the listening socket makes a total of four file descriptors for the select loop to monitor. When a client is disconnected the associated Transport and PoetryProtocol will be dereferenced and garbage-collected (assuming we haven’t stashed a reference to one of them somewhere, a practice we should avoid to prevent memory leaks). The PoetryFactory, meanwhile, will stick around as long as we keep listening for new connections which, in our poetry server, is forever. Like the beauty of poetry. Or something. At any rate, Figure 26 certainly cuts a fine figure of a Figure, doesn’t it?
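Twisted hides all of this, but a stdlib sketch of the same structure may help. It uses Python's `selectors` module in place of the reactor. `make_listener`, `run_once`, and `PoemSender` are hypothetical names, and `PoemSender` plays the role of one PoetryProtocol instance. The selector's map always holds the listening socket plus one entry per connected client, which is the picture in Figure 26.

```python
import selectors
import socket


class PoemSender:
    """Per-client state: the role one PoetryProtocol instance plays."""

    def __init__(self, sock, poem):
        self.sock = sock
        self.pending = poem  # bytes not yet accepted by the OS


def make_listener(port=0):
    """Non-blocking listening socket on localhost (port 0: OS picks one)."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", port))
    listener.listen()
    listener.setblocking(False)
    return listener


def run_once(sel, listener, poem, timeout=1.0):
    """One pass of the event loop over every monitored socket."""
    for key, _events in sel.select(timeout):
        if key.fileobj is listener:
            # A readable listening socket means a client is waiting to
            # connect, so accept() hands us a new client socket at once.
            try:
                client, _addr = listener.accept()
            except BlockingIOError:
                continue  # the client went away before we accepted it
            client.setblocking(False)
            sel.register(client, selectors.EVENT_WRITE, PoemSender(client, poem))
            continue
        sender = key.data
        try:
            sent = sender.sock.send(sender.pending)
        except BlockingIOError:
            continue  # send buffer full: retry on a later pass
        except ConnectionError:
            sent = len(sender.pending)  # client disconnected: drop the rest
        sender.pending = sender.pending[sent:]
        if not sender.pending:
            # Everything written: stop monitoring and close, as
            # loseConnection() does once the transport's buffer is empty.
            sel.unregister(sender.sock)
            sender.sock.close()
```

To use it, register the listener with `sel.register(listener, selectors.EVENT_READ)` and call `run_once()` in an endless loop. When a client's socket is unregistered and closed, its `PoemSender` has no remaining references and can be garbage-collected, just like a finished PoetryProtocol.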

The client sockets and their associated Python objects won’t live very long if the poem we are serving is relatively short. But with a large poem and a really busy poetry server we could end up with hundreds or thousands of simultaneous clients. And that’s OK — Twisted has no built-in limits on the number of connections it can handle. Of course, as you increase the load on any server, at some point you will find it cannot keep up or some internal OS limit is reached. For highly-loaded servers, careful measurement and testing is the order of the day.
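One common OS limit is the per-process cap on open file descriptors. The listening socket and every client socket each use one. On Unix-like systems you can inspect the cap with the standard `resource` module; this sketch does not run on Windows. The soft limit is the value `ulimit -n` shows in most shells. A process may raise its soft limit up to the hard limit with `resource.setrlimit()`, and either value may be `resource.RLIM_INFINITY`.

```python
import resource  # Unix-only standard library module


def open_file_limits():
    """Return the (soft, hard) limit on open file descriptors for this process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


soft, hard = open_file_limits()
# The listening socket and each connected client use one descriptor apiece,
# so the soft limit (minus other open files) caps simultaneous connections.
print("soft limit:", soft, "hard limit:", hard)
```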

Twisted also imposes no limit on the number of ports we can listen on. In fact, a single Twisted process could listen on dozens of ports and provide a different service on each one (by using a different factory class for each listenTCP call). And with careful design, whether you provide multiple services with a single Twisted process or several is a decision you could potentially even postpone to the deployment phase.
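In Twisted this is just several listenTCP calls, each with its own factory. Below is the same shape as a stdlib-only sketch. Each listening socket is registered with the selector together with the "factory" that serves it. A readable socket therefore tells the loop both that a client is waiting and which service it wants.

`listen_on` and `dispatch` are hypothetical names. Each factory is a plain callable that receives the client address, loosely echoing the address passed to buildProtocol. For brevity the reply is sent with a blocking `sendall()`, which a real non-blocking server would avoid.

```python
import selectors
import socket


def listen_on(sel, port, factory):
    """Open a listening socket and remember which factory serves it."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind(("127.0.0.1", port))
    sock.listen()
    sock.setblocking(False)
    sel.register(sock, selectors.EVENT_READ, data=factory)
    return sock.getsockname()[1]  # the real port, useful when port == 0


def dispatch(sel, timeout=1.0):
    """Accept waiting clients, handing each to its own port's factory."""
    handled = 0
    for key, _events in sel.select(timeout):
        try:
            client, addr = key.fileobj.accept()
        except BlockingIOError:
            continue
        with client:
            client.setblocking(True)        # blocking send: a simplification
            client.sendall(key.data(addr))  # this port's factory picks the reply
        handled += 1
    return handled
```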

There are a couple of things our server is missing. First of all, it doesn’t generate any logs that might help us debug problems or analyze our network traffic. Furthermore, the server doesn’t run as a daemon, making it vulnerable to death by accidental Ctrl-C (or just logging out). We’ll fix both of those problems in a future Part, but first, in Part 12, we’ll write another server to perform poetry transformation.

Suggested Exercises

- Write an asynchronous poetry server without using Twisted, like we did for the client in Part 2. Note that listening sockets need to be monitored for reading and a “readable” listening socket means we can accept a new client socket.

- Write a low-level asynchronous poetry server using Twisted, but without using listenTCP or protocols, transports, and factories, like we did for the client in Part 4. So you’ll still be making your own sockets, but you can use the Twisted reactor instead of your own select loop.

- Make the high-level version of the Twisted poetry server a “slow server” by using callLater or LoopingCall to make multiple calls to transport.write(). Add the --num-bytes and --delay command line options supported by the blocking server. Don’t forget to handle the case where the client disconnects before receiving the whole poem.

- Extend the high-level Twisted server so it can serve multiple poems (on different ports).

- What are some reasons to serve multiple services from the same Twisted process? What are some reasons not to?

21 replies on “Your Poetry is Served”

Good fresh part done! I like figure explaining connections to server 🙂

I still have questions 🙂

How does the Factory (or the reactor loop?) distinguish between Protocols? Do they have names with the client port number in them?

What if a Protocol has things to do after the client disconnects? Will the instance be destroyed immediately after the disconnect?

Thanks again for great tutorial for the rest of us!

Good questions!

In the server in Part 11, the factory does not distinguish between protocols; it just makes a new (identical) protocol for each new connection via buildProtocol. Each protocol is connected to a different Transport, but that happens outside of the factory. Note that buildProtocol is passed the address of the incoming connection, so it could potentially take actions based on that information, but most of the time you want to do the same thing for every client no matter where they might be coming from.

Anyway, once the protocol has been created, it’s really in charge of the specific connection, not the factory.

When a connection is done, the associated protocol receives a connectionLost callback, where you can take any cleanup actions you need to.

Thanks! That is useful information!

“Like the client, the server uses a Protocol instance to manage connections (…)”

Shouldn’t that be “instances” instead of “instance”. Current wording suggests there’s one instance of Protocol handling all connections.

Good point, I updated the wording to make that clear.

Hi, Dave! Thank you for such interesting and useful articles! Could you help me with one question? I wrote an asynchronous non-Twisted poetry server (as specified in the first exercise in Part 11); you can see it here: http://pastebin.com/G2HHrwWQ. I am confused by lines 61-62: these are blocking operations. If 2 clients connect to this server (you can use any of the poetry clients, for example twisted-client-4/get-poetry.py), the download speed for each client is cut in half (with 3 clients, it drops to a third). How would you recommend replacing this code so that it works equally fast regardless of the number of clients?

Hi Roman, you’re on the right track using a select loop. In addition, you need to:

1. Set the accepted sockets to be non-blocking.

2. Use send() instead of sendall(). Remember that send may not write all the bytes. It returns the number actually written.

3. Catch socket errors from send() with the EWOULDBLOCK code and ignore them (proceed to the next descriptor from select).

Make sense?

Thank you, Dave.
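Dave's three steps above fit in one small helper. This sketch assumes Python 3, where an EWOULDBLOCK/EAGAIN error surfaces as `BlockingIOError`. `send_some` is a hypothetical name. The caller keeps the returned remainder and retries once select() next reports the socket writable.

```python
import socket


def send_some(sock, data):
    """Write what the OS will take right now; return the unsent remainder.

    Assumes `sock` is non-blocking (step 1), uses send() rather than
    sendall() (step 2), and treats EWOULDBLOCK as "try later" (step 3).
    """
    try:
        sent = sock.send(data)  # may write fewer bytes than len(data)
    except BlockingIOError:     # errno EWOULDBLOCK / EAGAIN
        return data             # nothing sent; move on to the next socket
    return data[sent:]
```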

Hi Dave,

How would you test EWOULDBLOCK on the server in this case? I tried adding time.sleep to my client, but it didn’t trigger it. I’m guessing there is a buffer somewhere that is handling everything smoothly. Thanks for the tutorials.

Hey Phil, you are welcome! I think you’re right about the buffering. I’d try using a much larger amount of data to trigger that case.

Thanks. I did use a larger file and stopped the client from receiving data to trigger it. I did find some peculiar behavior that I think is Windows only. I’ve written about it below if anyone is interested. If anyone finds anything incorrect with what I’ve said, please let me know.

Initially, I was just curious how I would trigger a ‘WOULD BLOCK’ socket.error on a server send. To do this, I found the best way was to stop the client code from ever trying to read the data (i.e. from using recv()) so that the send buffer of the server would fill up (presumably requiring the receive buffer on the client to also fill up). A larger file than the poetry ones had to be used.

I found that on my system (using Windows 7), about 23.3KB worth of small send() calls would then cause an ‘EWOULDBLOCK’ error (presumably that fills both the receive and send buffers on the client and server respectively). However, I also found that I could send a very large file (1.5GB) in one send() call and the (non-blocking) send would return immediately, indicating that ALL the data was sent.

My system’s memory usage increased by the same amount, though it was not attributed to any application. The client, however, would for a long time afterwards still indicate that it was receiving data, even if the server was closed. The extra memory usage would only subside once the client was closed or the data was transferred. After the buffer ballooned to 1.5GB, if I tried to make another request from a different client, only then would the server trigger EWOULDBLOCK or something similar.

I found a description of why this was happening on some Microsoft support documentation

https://support.microsoft.com/en-us/help/214397/design-issues-sending-small-data-segments-over-tcp-with-winsock#section-2

First, note that data goes to a send buffer (SO_SNDBUF) before it actually gets in transit on the network. This buffer and the receive buffer (SO_RCVBUF) are managed by the OS. On Windows at least, a successful send() doesn’t actually mean that the data is now in transit on the network; it only means the data has arrived in the send buffer. The default Winsock (Windows Sockets API) buffer size is only 8K.

One would expect EWOULDBLOCK to occur once the send buffer is full, and to stop occurring only when the buffer has free space again. However, the Winsock send buffer can actually expand much larger (like 1.5GB in my case) in various circumstances.

So, what actually happened was that the whole 1.5GB file went into the send buffer which expanded to accommodate it. Winsock said success to the send() command but actually it was lying. It was hoarding the data in the send buffer.

In conclusion, Winsock lies.

Nice sleuthing 🙂
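Phil's experiment can be reproduced in a single Python 3 process. Open a loopback TCP connection, never call recv() on the receiving end, and keep doing non-blocking sends until the OS refuses. The sketch also reports the SO_SNDBUF and SO_RCVBUF values the OS claims for the sending socket.

`buffer_sizes` and `fill_until_would_block` are hypothetical names. The numbers depend entirely on the OS and its buffer tuning, so the Windows figures above will not match Linux or macOS. The `max_bytes` cap guards against a stack that, as described above, keeps absorbing data.

```python
import socket


def buffer_sizes(sock):
    """Return the (send, receive) buffer sizes the OS reports for `sock`."""
    return (sock.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF),
            sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF))


def fill_until_would_block(chunk_size=64 * 1024, max_bytes=256 * 1024 * 1024):
    """Send over loopback TCP to a peer that never reads.

    Returns (bytes accepted before send() would block, sender buffer sizes).
    Stops early at max_bytes in case the OS keeps growing its buffers.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))
        listener.listen()
        with socket.create_connection(listener.getsockname()) as reader:
            sender, _addr = listener.accept()
            with sender:
                sender.setblocking(False)
                chunk = b"x" * chunk_size
                total = 0
                # `reader` never calls recv(), so its receive buffer fills,
                # then the sender's send buffer fills, then send() refuses.
                while total < max_bytes:
                    try:
                        total += sender.send(chunk)
                    except BlockingIOError:  # EWOULDBLOCK / EAGAIN
                        break
                return total, buffer_sizes(sender)


accepted, (sndbuf, rcvbuf) = fill_until_would_block()
print(f"{accepted} bytes accepted before EWOULDBLOCK "
      f"(SO_SNDBUF={sndbuf}, SO_RCVBUF={rcvbuf})")
```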

Hi, Dave! Thank you for such nice tutorials!

Would you please help with some questions?

1) Please imagine an application such as a file server: a client sends a request like ‘get filename1’, and the file server answers the request by returning the content of filename1. The server is Twisted-style, but as far as I know, reading a file from disk is time-consuming. While the server is transferring a file to one client, it can’t answer requests from other clients. How could I solve this problem? Could I read a local file in an asynchronous way, or …?

2) Could you please send me some small Twisted-style programs with a GUI (or some links)? What confuses me is that Twisted is an event loop running forever, and the GUI also has an event loop; how do you combine these two loops? I guess callbacks (using Deferreds in Twisted) should play an important role.

Thank you very much! (I am not an English speaker, so my written English is a little bit bad (^^)

Hello, glad you like the tutorials!

Thank you very much

Hi Dave,

I’m having a lot of trouble figuring out how I can implement the second exercise. The part I am getting confused by is how to get the accept to work as part of the reactor. The client from Part 4 is very different from the server in this respect. Would I have accept() run in doRead? Thanks.

Hi Kevin, you’ll need to look at the Twisted reactor API. Look for the methods that allow you to add a new socket to the reactor.

Part 11 is at http://krondo69349291.wpcomstaging.com/blog/?p=2048 where 2048=2^11, which is undeniably cool!!